I've often remarked that perception is far more important than reality, as we see proved time and time again in public and political life. Perception is what leads people like Beyoncé Knowles to walk out at a large, hyped press conference, as happened yesterday, and ask people to rise while she sings the national anthem, all to prove that she knows the words and the tune (there was apparently some controversy over her lip-syncing during the recent Presidential Inauguration).

One area where perception is incredibly important is risk and risk management. Specifically, those of us in developed countries tend to "overestimate rare risks and underestimate common ones" (as Bruce Schneier noted today). We can see the effects of such mistuned risk perceptions in the hasty, ill-conceived passage of laws like the USA-PATRIOT Act and in the creation of the TSA, all to address the perceived risk of terrorism, even though terrorist incidents are extremely rare and the resulting losses of civil liberties are hardly justified by the alleged benefits. We see similarly mistuned risk perceptions at play in the current gun control debate, which has targeted semi-automatic rifles based on certain visual, stylistic characteristics, intentionally conflating them with military-grade fully automatic firearms.

Many of these perceptions are driven by strong emotional responses and have little, if any, basis in logical thought. Yet, by and large, this is how many policies are made, whether it be the NY SAFE Act, the USA-PATRIOT Act, or the BYOD policies within your organization. It's why the vast majority of organizations still operate under a traditional perimeter-centric security model, despite seeing data and full-fledged computing devices walk in and out of their environments every business day. It's also why we see various health fads sweep the nation every year: the media get hold of a study and then use incredibly misleading language to imply causation when there is, at best, a tenuous correlation (see "Correlation Is Not Causation" by Dan Geer).

This line of thinking was spurred today after I watched this video of Professor David Spiegelhalter giving a public presentation at Cambridge University titled "Communicating risk and uncertainty." In it, he talks about various ways that risk, as well as uncertainty, can be represented visually and in writing. Starting around the 12-minute mark, he stresses the importance of looking at both the numerator and the denominator in a given probability or comparison (e.g., "1 out of 10" vs. "10 out of 100"). It turns out that a couple of interesting things happen when people compare ratios like these.

First, something called "ratio bias" seems to occur, wherein people think ratios with larger numbers are preferable to ratios with smaller numbers, even when the smaller-numbered ratio is actually more to their benefit (this effect is hotly debated). What this means for us in the professional world is that we need to be very careful when comparing ratios that use different denominators.
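One simple defense against ratio bias is to put every ratio on a common denominator before comparing them. The minimal Python sketch below does exactly that; the helper name `as_rate_per` and the choice of "per 1,000" are illustrative assumptions, not anything from Spiegelhalter's talk. The "9 in 100" entry is a hypothetical example of a ratio with bigger numbers that is nonetheless worse than "1 in 10."

```python
from fractions import Fraction


def as_rate_per(numerator: int, denominator: int, base: int = 1000) -> Fraction:
    """Hypothetical helper: express numerator/denominator as an exact rate per `base`."""
    return Fraction(numerator, denominator) * base


# Illustrative ratios: one "small-numbered" ratio and two "large-numbered" ones.
ratios = {
    "1 in 10": (1, 10),
    "10 in 100": (10, 100),
    "9 in 100": (9, 100),
}

for label, (num, den) in ratios.items():
    # Normalizing to a shared denominator makes the comparison honest.
    print(f"{label:>10}: {as_rate_per(num, den)} per 1,000")

# Same underlying probability, despite the bigger numbers.
print(Fraction(1, 10) == Fraction(10, 100))
# Bigger numerator and denominator, yet a *lower* probability.
print(Fraction(9, 100) < Fraction(1, 10))
```

Using `Fraction` rather than floats keeps the comparison exact, so "1 in 10" and "10 in 100" compare as truly equal.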

This point leads into the second observation, "denominator neglect," wherein people focus almost exclusively on the numerator (the top number) and ignore the denominator (the bottom number) altogether. As Spiegelhalter points out, this happens in the news ALL the time, because it is a handy way to punctuate a storyline, even when the resulting use of the numbers is exceedingly misleading. One need only think of the scare of the week for a ready example, whether it be shark attacks, gun violence, or the misrepresentation of any number of medical studies.
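To see how denominator neglect can flip a conclusion, consider this small Python sketch. The group names and figures are entirely hypothetical, invented purely for illustration, and `rate_per_100k` is an assumed helper name. Ranking by raw event counts (numerator only) and ranking by rate (numerator over population) point to different groups.

```python
from fractions import Fraction


def rate_per_100k(events: int, population: int) -> Fraction:
    """Hypothetical helper: events per 100,000 people, computed exactly."""
    return Fraction(events, population) * 100_000


# Hypothetical figures, invented purely for illustration: (events, population).
groups = {
    "Group A": (30, 1_000_000),
    "Group B": (12, 100_000),
}

# Headline-style ranking: look only at the numerator.
by_count = max(groups, key=lambda g: groups[g][0])
# Honest ranking: account for the denominator as well.
by_rate = max(groups, key=lambda g: rate_per_100k(*groups[g]))

print("Most events (numerator only):", by_count)
print("Highest rate (with denominator):", by_rate)
for name, (events, pop) in groups.items():
    print(f"{name}: {events} events, {float(rate_per_100k(events, pop)):.1f} per 100,000")
```

A story that reports only "30 incidents vs. 12 incidents" would make Group A sound riskier, even though Group B's rate is four times higher.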

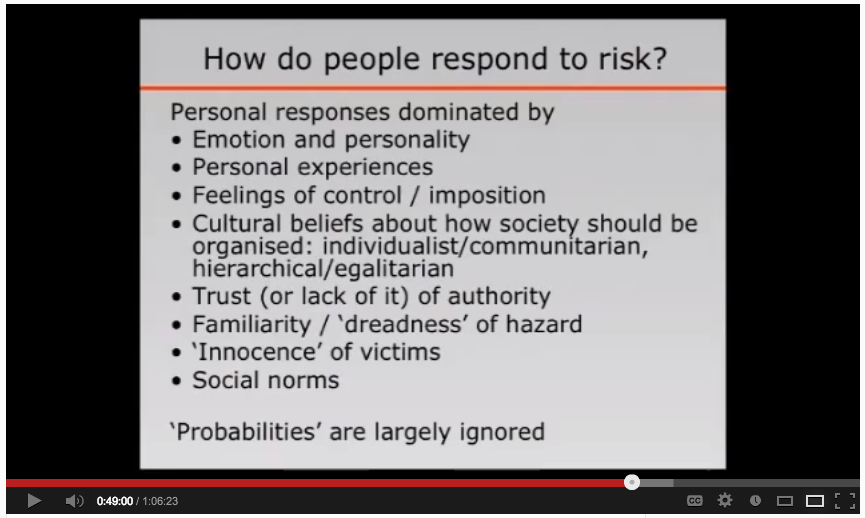

Perhaps his most interesting point comes at the end of his presentation, as shown in the screenshot. In it, he points out that most people's responses to risk and uncertainty are based on social and emotional factors, not on anything statistical or rational. Better yet, his bottom line is that people largely ignore probabilities.

Just think about how this applies in the business world. How many IT/security risk management decisions are based on nothing more than fears or biases? On the flip side, how often do people take significant risks with data because they don't perceive or understand the scope of the risks involved? Spiegelhalter pretty much nails it in his summary... and none of those points help us make better decisions. All of this should make you stop and wonder: How can we better manage the perception of risk as part of our risk management programs? (Answering that is best saved for another day and another post. ;)

Now, for something truly trippy on this same topic of perception, I encourage you to check out this piece from NPR's Science Friday show:

Have a good weekend!