The future of security is that it shouldn't have a future; at least, not as its own dedicated profession. Rather, security is merely an attribute of operations or code, which is then reflected through appropriate risk management and governance oversight observations and functions. That we still have dedicated "security" functions belies the simple truth that creating separation ends up causing as many problems as it solves (if not more).

This is not a new line of thinking for me. Nearly 3 years ago I asked whether or not a security department was needed. At the time, I was working as a technical director of security for an SMB tech firm, and as the first dedicated security resource, I had concluded that building a team wasn't going to be fruitful. Rather, it made sense to jump right past the "dedicated security team" phase and go right to the desired end-state.

The Security Lifecycle

I've used this example anecdotally in talks over the past year, but I think it bears relaying here, too. The rise and maturation of "security" practices seems to follow a consistent lifecycle. While there is often a point where having a dedicated security resource or team is useful, there also seems to be a law of diminishing returns. More importantly, having dedicated resources seems to address short-term organizational deficiencies, rather than solving persistent, long-term problems.

In the beginning, we had IT, and it was by-and-large a dedicated, separate function. Out of this grew an environment of sheer hubris wherein business people came to the IT gods and supplicated, asking for resources and assistance. However, the walls of this sanctum began to disintegrate in the mid-90s with the rise of the Internet and the mainstreaming of PC technology. By the turn of the century, IT had become routinely accessible to everyone, and a few years later - thanks in large part to innovations from Apple - technology became demystified and essentially commonplace. No longer can IT personnel play the role of demigods demanding reverence and ritualistic sacrifice. The days of the Bastard Operator From Hell are essentially gone.

Security has followed a trend similar to the rise and fall of the IT-as-demigods culture. Out of the IT ranks arose dedicated security professionals - many of them extremely smart, cynical, and paranoid - who in turn treated IT folks the way that IT historically treated business peers (see Jimmy Fallon's portrayal of Nick Burns, Computer Guy). I'm sure many of you can think of multiple "ID-10-T" errors attributed to "stupid IT people" over the years.

As security resources emerged, it quickly became necessary to consolidate them into dedicated teams or departments, oftentimes taking on an increasing amount of varied responsibilities ranging from operations to governance. Unfortunately, given the origins in IT, the majority of these teams remain(ed) buried in IT departments, or reporting up through CIOs, with a heavy operational focus (not that this is bad, per se - it just changes how "security" business is done and focused). Fast-forward to modern times, where much of security needs to be driven by risk management programs, and we quickly see a disconnect forming.

The natural evolution, which we've been seeing over the past few years, is to get away from dedicated security teams almost altogether. The first target for transition is to remove operational security duties and dissolve them back into IT operations. After all, operations is operations is operations, and there's really no need to maintain duplicate staff or infrastructure. Second, there are typically a couple of areas remaining: incident response and GRC. The incident response team can oftentimes remain in IT, close to the technology that it's supporting. This is logical and easy. However, GRC programs (and here we're talking about GRC as a discipline, not as a tool or platform) cannot and should not remain buried in IT organizations. Instead, they need to be elevated into the business structure, oftentimes reporting in with other risk managers, such as under a General Counsel (Legal team), CFO, or business analysis team.

It seems to me that this is all widely held and accepted today. Many organizations have already moved to this end state where security-related operations have been dissolved back into IT departments/teams, with oversight functions elevated into GRC programs that pull together risk management, policy, audit, and various related testing and data analysis duties. However, if this is the case, then I wonder why it is that we're still talking about security? In fact, it strikes me as sadly ironic that the politicians and the federal government seem increasingly incensed with "cybersecurity" just as those duties return to ops. Instead of focusing there, where should they be focusing?

Supplanting Security With Reliability and GRC

Last month I heard the inimitable Dan Geer speak on the future of security at the Rocky Mountain Information Security Conference (RMISC) in Denver, CO. During his keynote he discussed the need to do away with "security" in favor of terms that better describe the desired outcome. In particular, he stated that he views security as being an attribute of reliability, and encouraged people to move to a mindset focused on reliability - which, incidentally, has strong roots in engineering - and get away from the sentiment-oriented term "security," which is often meaningless.

I think Geer's idea is excellent, and it further supports the lifecycle described above. Moreover, when it comes to defining metrics for IT operations, we already understand reliability and how to measure it. Sure, there are additional considerations related to the traditional cause of security, such as from all the additional data sources. But, for the most part, this is an easily understood concept. And, more importantly, focusing on reliability aligns with networked systems survivability and the imperative for business survival. Moreover, it also better aligns with risk management approaches, which are themselves focused not on eliminating all risk, but on managing risk within acceptable limits so as to optimize business performance.

This shift in approaches also helps address the question that Michael Santarcangelo recently asked in his CSO Online article "Is your definition of security holding you back?" We oftentimes have difficulties managing security because everyone is not on the same page, which in turn relates to the fact that we aren't generally speaking the same language. Dumping the confusing term of "security" (or "cybersecurity") in favor of reliability and GRC would go a long way toward resolving those concerns.

So, What's My Job?

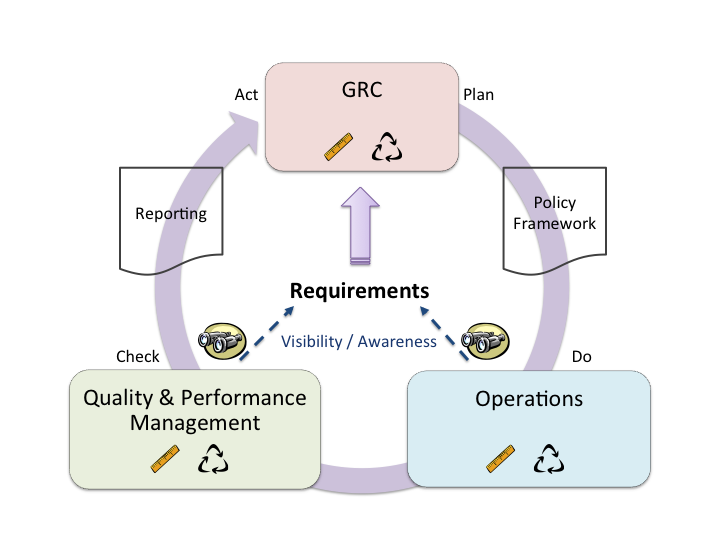

Making a wholesale change like this is not necessarily easy, but it is necessary. Thinking in terms of my TEAM Model, I think this necessitates a revision, which I believe changes the first two component areas to GRC and Operations (or IT Operations), as depicted in the accompanying figure. In terms of the impact on organizational structure, this translates to having a strong operations team/department, elevating a GRC team into the business leadership to help with decision analysis and support, and enhancing quality and performance programs like audit, penetration testing, and code analysis, to have an equal say. Ultimately, your job should fall under one of those buckets.

At the end of the day, this is not so much about "security" going away as a need, but about it evolving and maturing into something more useful. As long as we continue talking about security, we continue to perpetuate myths that are counter to our desired objectives. Instead, by shifting to GRC and reliability as the core focus, we can leverage well-established engineering practices, as well as help mature them to better account for all relevant attributes. This imperative further solidifies when considering modern challenges like "big data" wherein we already have decent analytical islands, but still lack a consistent second-tier level of analysis to pull it all together. Operations teams can have the luxury of working with siloed analytics, but the business as a whole cannot, which necessitates an elevated GRC program that helps provide better quality data to decision-makers. All of these changes culminate in better-informed leaders who can then make decisions that are more defensible legally, which in turn helps improve the risk management posture of the business; a true win-win.

Just wanted to leave a quick comment to say we got rid of the central security team/department/silo about 5 years ago. Security staff are part of the departments which need security staff, so as Application Security Lead I'm part of the Product Management department with my peer in Operations being part of that team.

I'll be honest and tell you it's one of the best things we've ever done!

SN

Brilliant. I've been saying similar things now for years. With the advent of virtualization, many of the roles that were in silos are compressed back into the umbrella of "IT". Security is left out. Show me IT guys who'll give root access to their virtualization environment to security guys except under duress?

Hoff made similar points in a recent blog article. I've been banging the drum now for 2+ years on security and IT needing to come together.

I hope more people start listening.

Thanks for writing this.

mike

Sorry, close but no cigar.

The way to go is not via risk and reliability, because their fundamental underlying assumptions don't sit well with why security is an issue.

Reliability is based on the management of a certain type of risk, and comes about via the ideas underpinning the insurance industry and the mathematical models they developed to measure their liability.

The fatal assumption is that although individual events happen at random, on a large enough population you get an approximation against time that is sufficiently reliable to build a business model on.

Part of this occurs because of the "assumption of locality of tangible objects": if you are a thief stealing objects of worth, you have to go where they are to steal them, and thus you cannot be somewhere else committing another theft at the same time. Whilst this is true for physical tangible objects, it is not true of intangible information objects.

What an information thief does is co-opt other people's systems into giving a copy of the information object to the thief. As this co-opting is itself done by information that has a near zero cost of duplication, the thief can attack thousands of places automatically.

This allows for not just "1000 year storm" level attacks but for thousands of thousand-year-storm level attacks to be carried out as near simultaneously as makes no difference in human terms of reference. This is the "army of one" concept underpinning the factual aspect of APT (the bit that's true after you strip away the political rhetoric of appropriations-grabbing FUD).

The risk models currently in use by and large cannot handle this sort of risk because of the assumptions underlying the models.
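
The independence assumption described above can be made concrete with a small calculation. This is only a sketch under stated assumptions: a hypothetical portfolio of `n` identical sites, each compromised with probability `p` in a given period (both values invented for illustration). It compares the spread of the fraction of sites hit when compromises are independent with the case where one automated attack either hits every site at once or none.

```python
import math

def std_independent(p: float, n: int) -> float:
    """Std. deviation of the fraction of sites hit when events are independent."""
    return math.sqrt(p * (1 - p) / n)

def std_fully_correlated(p: float) -> float:
    """Std. deviation when all sites are hit together (or not at all)."""
    return math.sqrt(p * (1 - p))

# Hypothetical values, chosen only for illustration
p = 0.01      # chance a given site is compromised in the period
n = 10_000    # number of sites in the pool

independent = std_independent(p, n)
correlated = std_fully_correlated(p)

print(f"independent events: std of loss fraction = {independent:.6f}")
print(f"fully correlated:   std of loss fraction = {correlated:.6f}")
print(f"ratio = {correlated / independent:.0f}x")
```

Under independence, pooling shrinks the spread by a factor of the square root of `n`, which is what makes the pooled loss predictable enough to price. When one attack can reach every site at the same moment, pooling gives no such benefit.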

Before people say this has not happened, I would argue we have come close with the failure of the banking industry and more recently with the issues arising from high speed trading. They are clear warning signs that our current understanding of risk is wrong.

If you want to follow an engineering model that will work, go and examine Quality Systems. As I've been saying since the last century, Security is a Quality issue and should be treated as such. Security should not start with the design of a product, or the processes involved with the design of the product, or for that matter the processes that control the processes that control the design; it should be a fundamental ethos that underpins the organisation along with ethics.

Failing to do this along with failing to realise that our current risk models are based on tangible physical world assumptions that do not of necessity apply to the non physical intangible information world is a recipe for the same types of failure we currently see.

Thank you for the comment, Clive, but you've completely missed the point. First off, your comments on risk analysis are fatalistic because of the common error of threat centrism. It's a very common fallacy in criticizing risk management, saying that because we can never fully enumerate threats and vulnerabilities, we thus can never fully model risk. This is, of course, bollocks. In order to ensure business survivability, we can take an asset-centric approach (I use the term "asset" broadly here to mean people, processes, and technology) and determine what is most important to continued operations. Focus on how to protect and recover those assets, and to limit losses. I write about this extensively here:

http://www.secureconsulting.net/2011/11/assets-black-swans-and-threats.html

Second, I'm advocating a quality model. My master's degree is in engineering management, and the TEAM Model that's given as an example above was developed during that program. The model has evolved since I first created it in 2005, but the fact still remains that it is absolutely an approach based on engineering principles. Moreover, it seems that you yourself don't understand what you're advocating, since reliability and resilience are key attributes of a quality program. Ergo, I question your entire line of ranting since it's not only internally inconsistent, but actually self-contradictory.

cheers,

-ben

Ben,

I guess you've not read your own article as others have, as a stand-alone entity; you did not mention a "quality process" in it once (only improving the quality of an outcome and the quality of data). So subsequently arguing that you actually "advocate a quality model" when you were only talking about reliability and risk kind of makes you look like you didn't mean what you had written...

Secondly, I was not arguing about which method to use, other than that quality systems are or should be fundamental to an organisation and that reliability in engineering terms is at best part of the design and manufacture process (and thus a very small part of an organisation-wide quality system). What I was arguing is that the "current" underlying models used to assess risk have significant flaws, and that the models, not the risk process, are inappropriate in security relating to the topic of discussion (security lifecycle in IT systems).

This can easily be seen with,

"The risk models currently in use by and large cannot handle this sort of risk because of the assumptions underlying the models"

and,

"... failing to realise that our current risk models are based on tangible physical world assumptions that do not of necessity apply to the non physical intangible information world is a recipe for the same types of failure we currently see."

and other comments.

Thus I was not in any way arguing against the use or non-use of risk assessment, only that the current models are too limited because of their underlying assumptions, which are based on the normalised behaviour of tangible physical objects constrained by the laws of physics, not intangible information objects that are only constrained by the laws of physics when impressed onto physical objects for storage and communications. That is, the current models are way too narrow in scope for historical reasons, and we are (or should be) aware of this.

So how on earth you can come to the conclusion that I'm commenting,

"... your comments on risk analysis are fatalistic because of the common error of threat centrism. It's a very common fallacy in criticizing risk management, saying that because we can never fully enumerate threats and vulnerabilities, we thus can never fully model risk."

I do not know, as I most definitely did not say or imply anything of the sort, nor can those sentiments be drawn from what I have said. Thus you do yourself a significant disservice by trying it as an opening argument. So please do not try and put your pet peeves that colour your thinking about how others view your field of endeavour into the argument as, at best, a very lame straw man.

With regards to reliability from the engineering perspective, it is largely based on statistics about the characteristics (MTTF and MTTR) of physical objects that age (which information does not). Even though it is a valid engineering tool for physical objects, it is only valid when its underlying methods are valid. The time of actual hard reliability measurements in electronics ended many years ago when individual component MTTFs far exceeded the expected usage lifetime of the systems they are a part of. Typically we have components in use with projected MTTFs of around half a million hours (a little over 57 years) whilst the expected time to obsolescence of a system is a little over 13K hours (18 months). These projected reliability figures are then plugged into what are in effect risk based models and used to design system architectures where statistical outliers such as juvenile failure can be mitigated. And it is this aspect of reliability, or more precisely availability, that Dan Geer is interested in. His viewpoint is, as I'm sure you are currently aware, that there is little value in further extending MTTF because the engineering costs are prohibitively high and the engineering also suffers from "imperfect knowledge" of security failure modes. Thus we need to concentrate on MTTR for our systems. He has further expounded the viewpoint that "The point of nature is death" and that we should actually design systems, especially certain types of embedded systems, to effectively die prematurely as a way to kill off currently unknown security flaws. It's a viewpoint I don't tend to agree with except in very limited circumstances, especially when talking about Implanted Electronic Devices (IEDs) in medicine such as Pacemakers, or Smart Meters (a simple hunt around on various security web sites against my name will give you further background on what I think with regards to this and why).
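
The hour figures quoted above can be checked with a little arithmetic, and the same arithmetic shows why attention shifts to MTTR. The following is a minimal sketch, assuming 8,760 hours per year and the standard steady-state availability formula MTTF / (MTTF + MTTR). The system-level MTTF and the two MTTR values are hypothetical and only illustrate the shape of the trade-off.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760; leap years ignored

def hours_to_years(hours: float) -> float:
    return hours / HOURS_PER_YEAR

def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: fraction of time the system is up."""
    return mttf_hours / (mttf_hours + mttr_hours)

# Figures quoted in the comment
component_mttf = 500_000   # hours
system_lifetime = 13_000   # hours

print(f"component MTTF:  {hours_to_years(component_mttf):.1f} years")
print(f"system lifetime: {hours_to_years(system_lifetime) * 12:.1f} months")

# Hypothetical system-level figures, for illustration only
system_mttf = 1_000.0      # hours between failures
for mttr in (24.0, 1.0):   # slow repair vs. fast repair
    a = availability(system_mttf, mttr)
    print(f"MTTR {mttr:>4.0f} h -> availability {a:.4%}")
```

With these assumed numbers, cutting repair time from a day to an hour raises availability without touching MTTF at all, which is the direction of the MTTR argument described above.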

All of that aside, I did not use what many consider to be "expletives" or "intemperate language" in my comments; the fact that you chose to do so is sad, because you do yourself a further disservice by doing so.

Now if you wish to argue in a civilised fashion about my premise on the problems with "the inappropriate use of current physically based risk models for non physical information", fine, I would be more than happy to debate the point; people's viewpoints are generally advanced by reasoned debate which ripples out into the wider community.

Clive,

I'm not really sure how to respond to your most recent comment. For example, you seem to think engineering reliability only applies to a physical world, which simply isn't true. MTTF and MTTR are great metrics in an IT environment. You can readily calculate them against confidentiality and availability.
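
As a rough illustration of how these metrics can be tracked in an IT setting, here is a minimal sketch that derives MTTR and MTTF from an outage log. The incident records and the observation window are invented for the example, and `mttr_and_mttf` is a hypothetical helper, not part of any tool.

```python
from datetime import datetime, timedelta

# Hypothetical outage records: (failure detected, service restored)
incidents = [
    (datetime(2012, 1, 10, 8, 0), datetime(2012, 1, 10, 10, 0)),
    (datetime(2012, 2, 20, 14, 0), datetime(2012, 2, 20, 15, 0)),
    (datetime(2012, 3, 5, 2, 0), datetime(2012, 3, 5, 5, 0)),
]
window_start = datetime(2012, 1, 1)
window_end = datetime(2012, 4, 1)

def mttr_and_mttf(incidents, window_start, window_end):
    """Return (MTTR, MTTF) as timedeltas over the observation window."""
    downtime = sum((end - start for start, end in incidents), timedelta())
    uptime = (window_end - window_start) - downtime
    count = len(incidents)
    return downtime / count, uptime / count

mttr, mttf = mttr_and_mttf(incidents, window_start, window_end)
print(f"MTTR: {mttr}")
print(f"MTTF: {mttf}")
```

The same bookkeeping could in principle be applied to confidentiality events, for instance by recording the time from detection of an exposure to its containment, though what counts as "restored" there is an assumption each organization would have to define.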

Where I think you're still not getting the point here is that I am not applying physical world characteristics, nor am I bound by physical world definitions. The entire objective of the original post is that our approach is at best outdated, and at worst completely faulty to begin with.

I suggest that to better understand my perspective you would first have to review and understand underlying key concepts like networked systems survivability and legal defensibility. When I discuss strategy with clients, I do not start my conversation with "what have you implemented to secure your business?" or "what technology do you have in place?" or even "what risk assessment have you done?" Rather, I start from the perspective of "What's most important to your business?" and "What business functions are critical to the ongoing operation and survival of the business?" Starting from that perspective allows for an asset-centric approach (again, assets being used broadly here to reflect people, processes, and technology), which allows us to understand what must then be protected and to what degree. That then leads naturally to a discussion of reliability and resilience (fault tolerance, too), and then can extend back to various practices and technologies.

In this way, "security" becomes antiquated. The business determines what is most critical to business survival, and that is then translated into performance objectives (e.g., SLAs) for Operations, all of which is then monitored and assessed as part of an overall quality & performance management program. As such, the TEAM Model described above is absolutely a quality model, even if I didn't use the word "quality" (there's no rule that requires me to use the word "quality" when describing "quality").

Apologies if you viewed my original response as somehow being uncivilized.

cheers,

-ben

Ben,

With regards,

"... is that our approach is at best outdated, and at worst completely faulty to begin with"

That is a point we both agree on.

With regards,

"For example, you seem to think engineering reliability only applies to a physical world, which simply isn't true"

No, I'm saying that the models used in engineering reliability that are based purely on physical world attributes should not be used unless their underlying physical assumptions have been checked and found appropriate for the non physical information world. That is, the reliability / availability process will be quite amenable to IS and Business usage if the underlying risk models are appropriate. Sadly, too many people use the wrong risk models because they are completely unaware of the underlying assumptions, and too many books on the subject of reliability just do not go down that far, so they will remain in blissful ignorance.

I think you should know that I don't hold with the view of "software engineering" or "security engineering" and I'm known for my opinion that by and large the practitioners are "artisans" carrying out their "trade" not "engineers" performing in their "profession".

Yes, I'm aware it sounds "snobbish" but it is not; the study of how Victorian "artisans" became "engineers" by adopting the early scientific principles attributed to Sir Isaac Newton that gave rise to "materials science" is an object lesson in why so much goes wrong in Software and Security. In both cases we lack the appropriate metrics to make a scientific study realistically possible. If for example you view "software patterns" you will find that the process by which they came about and the process by which they are used are the same as those of Wheel Wrights and early steam boiler makers. In fact the early Victorian approach to engines was to make them in such a way that when things broke you bolted a strengthening member on; this is by and large the process used in software development for the past fifty years. It is not the process by which engineers practicing in other fields of endeavour carry out their profession.

Look at it this way: much of the industry runs on "Best Practice", but have you ever asked yourself how the best practice actually comes about in the industry? I can tell you for free that most people have not; they are like the blind in the old saying, simply led by others who are blind. One author has referred to the process as "The Hamster Wheel of Pain" and he has a point.

With regards,

"... understanding underlying key concepts like networked systems survivability and legal defensibility."

I am by original training a communications design engineer and moved over to computer engineering when bit slice processors were used to make the ALUs in high performance computers, and have "had the joy" of developing the state machines and microcode to turn them into CPUs. I then moved back into communications engineering for High Rel and other systems used in the petro chem industries, specialising in command and control of remote systems etc. I've also designed telco equipment, both user and network, as well as some mil comms systems and encryption kit, so I've a passing knowledge of networked systems and survivability in quite a few areas. As for legal defensibility, it's an area I'm aware of sufficiently to know the basic pitfalls, one of which is why I find it is best left to those with significant training in the civil and criminal law fields of endeavour, especially when you practice in many jurisdictions which have very different legal systems.

With regards to the "asset centric view", I am continuously surprised at how few organisations actually know what their real assets are or how to assess one against another, or even assess their worth.

However, one thing I have found is that in the rush to "be online" or "in the cloud" many organisations really don't know what information they are making available and to whom, and this issue is getting worse with BYOD smart phones etc. Gunnar Peterson is a major drum beater for the "asset centric view" and has been for some time. And if you think about it, it is one of the basic premises of physical security, where at least people can see what they are securing and how.

This is partly why I've been a long term proponent of the view that technical staff should get at least a diploma in Business studies, and preferably an MBA, so that they can actually talk the language of walnut corridor without sounding like an alien from outer space, as well as getting a handle on how businesses generally function so that designs are generally more appropriate to the business needs.

As for 'In this way, "security" becomes antiquated.', historically it always has been. You may not be aware of it, but it is a peculiarity of the English language to have two words for "safety" and "security"; quite a few languages have only one word, which broadly aligns with "safety". The English word "security" is derived from the French word for "safety"; in this respect it is much like "beef" for cow flesh, "pork" for pig flesh and "mutton" for sheep flesh. Even the antithesis of security, "sabotage", is derived from French and quite literally means "to put the clog in the machinery" (sabot being French for clog). Part of this is due to the "Courtly use of French"; in a similar way to the use of Latin by the church, it was a way for those of status to distinguish themselves from the more common clay of mankind. As the foreign words became adopted into the general language that became English, as is normal, their meanings became changed in the process.

So "security" is a historical anachronism, but serves to provide a differing perspective to "safety" in terms of a process view rather than a state view; that is, security is a process by which safety is achieved. That is not going to change, but I agree it should not be a process "in splendid isolation" or seen as "an end to itself", and thus it should become part of an organisational ethos.

Oddly, whilst we all usually accept that we are responsible for physical security, and thus shut windows and lock drawers and doors when we leave an area or building, for some reason we have allowed the abdication of such responsibility in IS/ICT activities. Thus having a "security team" is much like having a private police force, and we have seen the attendant failings of such within organisations.

However, under "security's" all-encompassing embrace there are some very distinct specialist endeavours which you cannot expect ordinary developers to be au fait with, thus you will need specialists. The question is how they should form part of the development process and associated teams: should they be a "driver" or an "available resource"? If the latter, they will almost certainly be used "late in the game" and end up doing little more than "code review", and as such achieving little. As I've indicated, they need to be actively involved with a project before day zero so that they influence the entire design. Security when built in properly usually has a marginal overhead; however, when added as an afterthought it is far from marginal and clings on like a malignant growth, causing all sorts of other issues. In this respect it is as the Victorian artisans did after a new engine was found to be deficient: they just bolted or riveted a correcting or strengthening piece on, often markedly changing the performance of the engine in the process.

Clive,

A few brief responses...

1) I'm not a proponent of anything deemed "best practice" for reasons similar to your own. Contextless "advice" like that can oftentimes be as damaging as it is helpful. I've touched on this topic in this blog a few times:

http://www.secureconsulting.net/2010/06/best-practice-youre-saying-it.html

http://www.secureconsulting.net/2012/03/inevitable-devolution-of-stds.html

http://www.secureconsulting.net/Papers/Tomhave-Architecting_Adequacy.pdf

2) I agree that there is far more art than science today, but that doesn't prohibit us from describing our state as proto-science, which is the early formative stage of formalization. I think we have the potential to know much more about our environments than people like yourself and Donn Parker have granted. I think the metrics (like MTTF and MTTR) are easily recorded and tracked, and are useful. Similarly, given an asset-centric approach, I think we can know what is at risk and what the relative value is of those assets, thus allowing us to chart a more sane course. Unfortunately, today our businesses have no incentive (financial or legal) to pursue such a course, and thus things stagnate. While the attackers rapidly evolve their attacks, the defenders still stick with (as Gunnar Peterson often notes) SSL, firewalls, and AV. It's a truly sad state of affairs. Incidentally, I have another blog piece in draft about how to unbalance the culture in order to move the needle... it should hopefully be written this week if I can get some work writing completed first.

3) I also agree with your points on "security" as a term and concept. This is indeed largely why I think the term needs to be flushed from our vocabulary. It's a crutch that's holding us back. The etymology is interesting, but I think the in-context history is a bit different. This is, alas, another blog post in development, but suffice to say that the same arrogance that created separation between business and IT in the first place ("those idiots don't know the first thing about computers!") is the same arrogance that led to the creation of dedicated "security" teams ("those idiots don't know the first thing about security!"). In part, there's a valid argument for pulling out these duties in order to organize and optimize them, but that should be a short-term practice, not a long-term situation. Ergo, I think we're nearing the end of the usefulness for dedicated "security" teams and need to de-operationalize those practices, converting them to GRC teams that are themselves elevated in the organization and merged with other business GRC teams.

cheers,

-ben